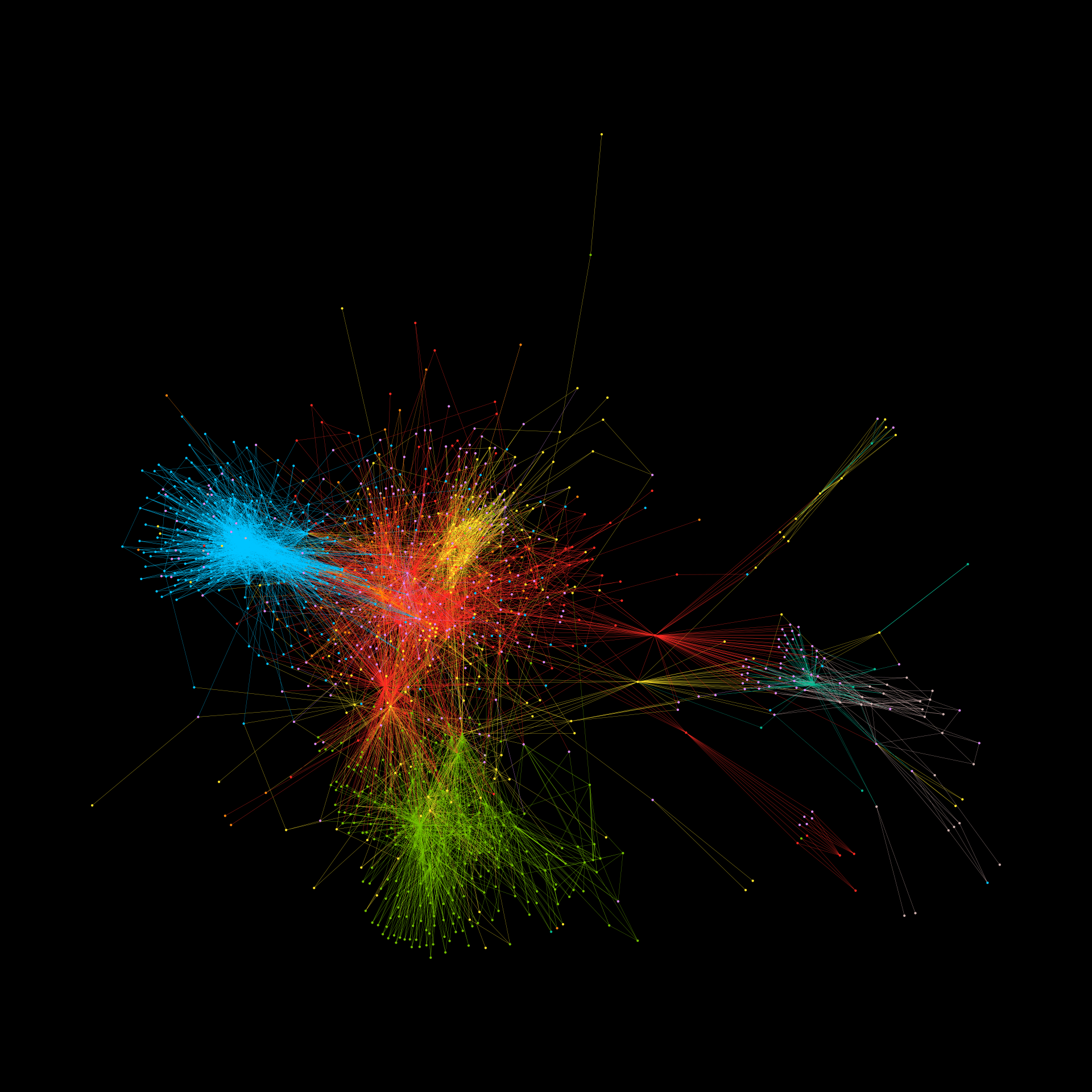

We derive a first-principles physics theory of the AI engine at the heart of the ‘magic’ of LLMs (e.g. ChatGPT, Claude): the basic Attention head. The theory allows a quantitative analysis of outstanding AI challenges such as output repetition, hallucination, harmful content, and bias (e.g. from training and fine-tuning). Its predictions are consistent with large-scale LLM outputs. Its 2-body form suggests why LLMs work so well, and hints that a generalized 3-body Attention would make such AI work even better. Its similarity to a spin-bath means that existing Physics expertise could immediately be harnessed to help Society ensure AI is trustworthy and resilient to manipulation.

Frank Yingjie Huo, Neil F. Johnson