- Bypassing Covert Resilience in Contentious Online Networks

SIAM News

Tragic acts of terrorism, such as February’s mass stabbing in Austria by a 23-year-old Syrian asylum seeker who was allegedly radicalized online by the Islamic State, accentuate the dangers of radicalization via the internet. Terrorist organizations exploit popular social media platforms to advance their ideology-driven agendas through recruitment, fundraising, and the spread of propaganda, all of which ultimately cause severe harm in communities around the world. From a national security perspective, this drive towards radicalization raises pressing questions about our ability to monitor, quantify, understand, predict, and even mitigate such efforts before they materialize as tragedies.

- Physics reveals and explains patterns in conflict casualties

Europhysics Letters

Why humans fight has no easy answer. However, understanding better how humans fight could inform future humanitarian aid planning and insight into hidden shifts for peace efforts. Here we show that an empirically-grounded physics theory of fighter dynamics — which is a generalization of the well-known physics of polymer assembly — can explain casualty patterns observed across decades of violence in a current conflict hotspot. It also suggests the possibility of future ‘super-shock’ surprise attacks that are even more lethal than have already been seen. These insights from physics open the door to new policy discussions surrounding humanitarian aid and peace efforts that account mechanistically for human violence across scales.

Frank Yingjie Huo, Dylan Restrepo, Pedro Manrique, Gordon Woo, Neil Johnson

- Influence of Twitter social network graph topologies on traditional and meme stocks during the 2021 GameStop short squeeze

NPJ Complexity

In early 2021, groups of Reddit and Twitter users collaborated to raise the price of GameStop stock from $20 to $400 in a matter of days. The heavy influence of social media activity on the rise of GameStop prices can be contrasted with the muted social media influence on other, more traditional stocks. While traditional stocks are modeled quite successfully by current methods, such methods break down when used to model these so-called meme stocks. Our project analyzes the graph topology of retweet graphs built from GameStop-related tweets and other meme stocks to find that the clustering coefficient and network diameter of a retweet graph can be used to decrease the mean absolute error of meme stock trading volume predictions by as much as 46% over the control group during the first 70 trading days of 2021.
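The two graph features named in this abstract, clustering coefficient and network diameter, can be illustrated with a minimal sketch. It assumes the retweet graph is treated as undirected and connected; the toy edge list and the helper names (`build_undirected`, `average_clustering`, `diameter`) are hypothetical and are not taken from the paper.

```python
from collections import deque


def build_undirected(edges):
    """Build an adjacency map {node: set(neighbours)} from (u, v) pairs."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj


def average_clustering(adj):
    """Mean local clustering coefficient over all nodes."""
    total = 0.0
    for nbrs in adj.values():
        k = len(nbrs)
        if k < 2:
            continue  # nodes with fewer than two neighbours contribute 0
        nbr_list = list(nbrs)
        # Count links that exist between pairs of this node's neighbours.
        links = sum(
            1
            for i in range(k)
            for j in range(i + 1, k)
            if nbr_list[j] in adj[nbr_list[i]]
        )
        total += 2.0 * links / (k * (k - 1))
    return total / len(adj)


def diameter(adj):
    """Longest shortest-path length (assumes the graph is connected)."""
    best = 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:  # breadth-first search from this source
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        best = max(best, max(dist.values()))
    return best


# Hypothetical toy retweet edges: (retweeting account, original account).
toy_edges = [("a", "b"), ("b", "c"), ("a", "c"), ("c", "d"), ("d", "e")]
graph = build_undirected(toy_edges)
clustering = average_clustering(graph)
longest_path = diameter(graph)
```

In a forecasting setting, values like `clustering` and `longest_path` would be computed per time window and supplied as extra features to a volume model; how the paper does this is not specified here.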

- Coevolution of network and attitudes under competing propaganda machines

NPJ Complexity

Politicization of the COVID-19 vaccination debate has led to a polarization of opinions regarding this topic. We present a theoretical model of this debate on Facebook. In this model, agents form opinions through information that they receive from other agents with flexible opinions and from politically motivated entities such as media or interest groups. The model captures the co-evolution of opinions and network structure under similarity-dependent social influence, as well as random network re-wiring and opinion change. We show that attitudinal polarization can be avoided if agents (1) connect to agents all across the opinion spectrum, (2) receive information from many sources before changing their opinions, (3) frequently change opinions at random, and (4) frequently connect to friends of friends. High Kleinberg authority scores among politically motivated media and two network components that are comparable in size can indicate the onset of attitudinal polarization.

Mikhail Lipatov, Lucia Illari, Neil Johnson, Sergey Gavrilets

- Multispecies Cohesion: Humans, Machinery, AI, and Beyond

Physical Review Letters

The global chaos caused by the July 19, 2024 technology meltdown highlights the need for a theory of what large-scale cohesive behaviors—dangerous or desirable—could suddenly emerge from future systems of interacting humans, machinery, and software, including artificial intelligence; when they will emerge; and how they will evolve and be controlled. Here, we offer answers by introducing an aggregation model that accounts for the interacting entities’ inter- and intraspecies diversities. It yields a novel multidimensional generalization of existing aggregation physics. We derive exact analytic solutions for the time to cohesion and growth of cohesion for two species, and some generalizations for an arbitrary number of species. These solutions reproduce—and offer a microscopic explanation for—an anomalous nonlinear growth feature observed in various current real-world systems. Our theory suggests good and bad “surprises” will appear sooner and more strongly as humans, machinery, artificial intelligence, and so on interact more, but it also offers a rigorous approach for understanding and controlling this.

Frank Yingjie Huo, Pedro Manrique, Neil Johnson

- How U.S. Presidential elections strengthen global hate networks

NPJ Complexity

Local or national politics can be a catalyst for potentially dangerous hate speech. But with a third of the world’s population eligible to vote in 2024 elections, we need an understanding of how individual-level hate multiplies up to the collective global scale. We show, based on the most recent U.S. presidential election, that offline events are associated with rapid adaptations of the global online hate universe that strengthen both its network-of-networks structure and the types of hate content that it collectively produces. Approximately 50 million accounts in hate communities are drawn closer to each other and to a broad mainstream of billions. The election triggered new hate content at scale around immigration, ethnicity, and antisemitism that aligns with conspiracy theories about Jewish-led replacement. Telegram acts as a key hardening agent; yet, it is overlooked by U.S. Congressional hearings and new E.U. legislation. Because the hate universe has remained robust since 2020, anti-hate messaging surrounding global events (e.g., upcoming elections or the war in Gaza) should pivot to blending multiple hate types while targeting previously untouched social media structures.

- Prior work shows same main takeaway

Science eLetters

This short eLetter shows that our earlier published studies precede the recently claimed breakthrough in use of automated/software/AI-based deliberation to soften extremes of opinions.

- Non-equilibrium physics of multi-species assembly applied to fibrils inhibition in biomolecular condensates and growth of online distrust

Scientific Reports

Self-assembly is a key process in living systems—from the microscopic biological level (e.g. assembly of proteins into fibrils within biomolecular condensates in a human cell) through to the macroscopic societal level (e.g. assembly of humans into common-interest communities across online social media platforms). The components in such systems (e.g. macromolecules, humans) are highly diverse, and so are the self-assembled structures that they form. However, there is no simple theory of how such structures assemble from a multi-species pool of components. Here we provide a very simple model which trades myriad chemical and human details for a transparent analysis, and yields results in good agreement with recent empirical data. It reveals a new inhibitory role for biomolecular condensates in the formation of dangerous amyloid fibrils, as well as a kinetic explanation of why so many diverse distrust movements are now emerging across social media. The nonlinear dependencies that we uncover suggest new real-world control strategies for such multi-species assembly.

Pedro Manrique, Frank Yingjie Huo, Sara El Oud, Neil Johnson

- Nonlinear spreading behavior across multi-platform social media universe

Chaos: An Interdisciplinary Journal of Nonlinear Science

Understanding how harmful content (mis/disinformation, hate, etc.) manages to spread among online communities within and across social media platforms represents an urgent societal challenge. We develop a non-linear dynamical model for such viral spreading, which accounts for the fact that online communities dynamically interconnect across multiple social media platforms. Our mean-field theory (Effective Medium Theory) compares well to detailed numerical simulations and provides a specific analytic condition for the onset of outbreaks (i.e., system-wide spreading). Even if the infection rate is significantly lower than the recovery rate, it predicts system-wide spreading if online communities create links between them at high rates and the loss of such links (e.g., due to moderator pressure) is low. Policymakers should, therefore, account for these multi-community dynamics when shaping policies against system-wide spreading.

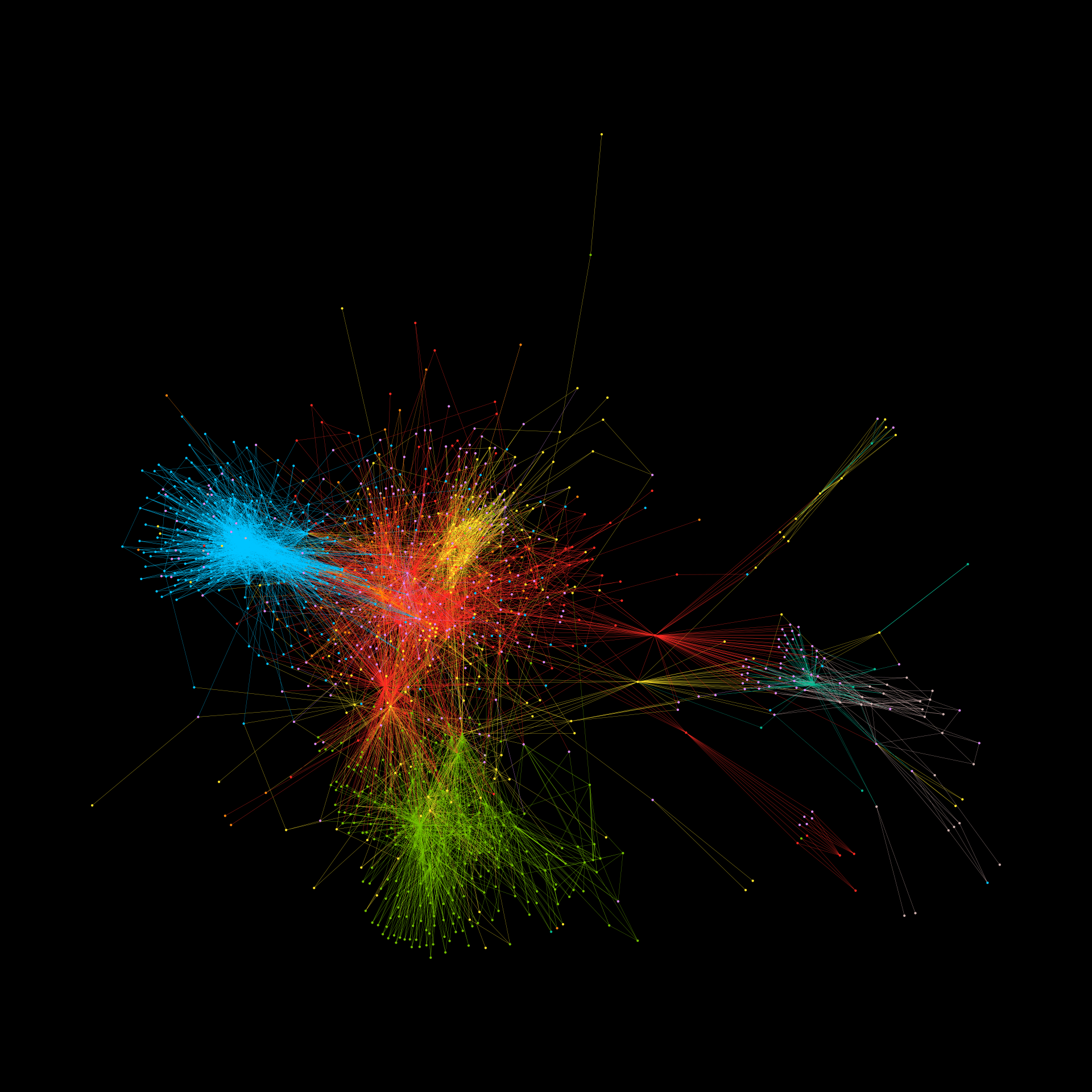

- Adaptive link dynamics drive online hate networks and their mainstream influence

NPJ Complexity

Online hate is dynamic and adaptive, and may soon surge with new AI/GPT tools. Establishing how hate operates at scale is key to overcoming it. We provide insights that challenge existing policies. Rather than large social media platforms being the key drivers, waves of adaptive links across smaller platforms connect the hate user base over time, fortifying hate networks, bypassing mitigations, and extending their direct influence into the massive neighboring mainstream. Data indicates that hundreds of thousands of people globally, including children, have been exposed. We present governing equations derived from first principles and a tipping-point condition predicting future surges in content transmission. Using the U.S. Capitol attack and a 2023 mass shooting as case studies, our findings offer actionable insights and quantitative predictions down to the hourly scale. The efficacy of proposed mitigations can now be predicted using these equations.

Minzhang Zheng, Richard Sear, Lucia Illari, Nicholas Restrepo, Neil Johnson

- Softening online extremes using network engineering

IEEE Access

The prevalence of dangerous misinformation and extreme views online has intensified since the onset of the Israel-Hamas war on 7 October 2023. Social media platforms have long grappled with the challenge of providing effective mitigation schemes that can scale to the 5 billion-strong online population. Here, we introduce a novel solution grounded in online network engineering and demonstrate its potential through small pilot studies. We begin by outlining the characteristics of the online social network infrastructure that have rendered previous approaches to mitigating extremes ineffective. We then present our new online engineering scheme and explain how it circumvents these issues. The efficacy of this scheme is demonstrated through a pilot empirical study, which reveals that automatically assembling groups of users online with diverse opinions, guided by a map of the online social media infrastructure, and facilitating their anonymous interactions, can lead to a softening of extreme views. We then employ computer simulations to explore the potential for implementing this scheme online at scale and in an automated manner, without necessitating the contentious removal of specific communities, imposing censorship, relying on preventative messaging, or requiring consensus within the online groups. These pilot studies provide preliminary insights into the effectiveness and feasibility of this approach in online social media settings.

- Controlling bad-actor-artificial intelligence activity at scale across online battlefields

PNAS Nexus

We consider the looming threat of bad actors using artificial intelligence (AI)/Generative Pretrained Transformer to generate harms across social media globally. Guided by our detailed mapping of the online multiplatform battlefield, we offer answers to the key questions of what bad-actor-AI activity will likely dominate, where, and when, as well as what might be done to control it at scale. A dynamical Red Queen analysis, applied from prior studies of cyber and automated algorithm attacks, predicts an escalation to daily bad-actor-AI activity by mid-2024, just ahead of United States and other global elections. We then use an exactly solvable mathematical model of the observed bad-actor community clustering dynamics to build a Policy Matrix which quantifies the outcomes and trade-offs between two potentially desirable outcomes: containment of future bad-actor-AI activity vs. its complete removal. We also give explicit plug-and-play formulae for associated risk measures.

Neil Johnson, Richard Sear, Lucia Illari

- Complexity of the online distrust ecosystem and its evolution

Frontiers in Complex Systems

Collective human distrust—and its associated mis/disinformation—is one of the most complex phenomena of our time, given that approximately 70% of the global population is now online. Current examples include distrust of medical expertise, climate change science, democratic election outcomes—and even distrust of fact-checked events in the current Israel-Hamas and Ukraine-Russia conflicts.

Lucia Illari, Nicholas J. Restrepo, Neil Johnson

- Inductive detection of influence operations via graph learning

Scientific Reports

Influence operations are large-scale efforts to manipulate public opinion. The rapid detection and disruption of these operations is critical for healthy public discourse. Emergent AI technologies may enable novel operations that evade detection and influence public discourse on social media with greater scale, reach, and specificity. New methods of detection with inductive learning capacity will be needed to identify novel operations before they indelibly alter public opinion and events. To this end, we develop an inductive learning framework that: (1) determines content- and graph-based indicators that are not specific to any operation; (2) uses graph learning to encode abstract signatures of coordinated manipulation; and (3) evaluates generalization capacity by training and testing models across operations originating from Russia, China, and Iran. We find that this framework enables strong cross-operation generalization while also revealing salient indicators, illustrating a generic approach which directly complements transductive methodologies, thereby enhancing detection coverage.

Nicholas Gabriel, David Broniatowski, Neil Johnson

- Explaining conflict violence in terms of conflict actor dynamics

Scientific Reports

We study the severity of conflict-related violence in Colombia at an unprecedented granular scale in space and across time. Splitting the data into different geographical regions and different historically-relevant periods, we uncover variations in the patterns of conflict severity which we then explain in terms of local conflict actors’ different collective behaviors and/or conditions using a simple mathematical model of conflict actors’ grouping dynamics (coalescence and fragmentation). Specifically, variations in the approximate scaling values of the distributions of event lethalities can be explained by the changing strength ratio of the local conflict actors for distinct conflict eras and organizational regions. In this way, our findings open the door to a new granular spectroscopy of human conflicts in terms of local conflict actor strength ratios for any armed conflict.

Katerina Tkacova, Annette Idler, Neil Johnson, Eduardo López
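The grouping dynamics mentioned in this abstract (coalescence and fragmentation) can be illustrated with a generic toy simulation. This is a sketch under stated assumptions, not the paper's model: clusters are chosen with probability proportional to their size, a chosen cluster either shatters into singletons with a hypothetical probability `frag_prob` or merges with a second size-weighted pick, and the function name `simulate_grouping` is invented for illustration.

```python
import random


def simulate_grouping(n_agents, steps, frag_prob, seed=0):
    """Toy coalescence-fragmentation process; returns the list of cluster sizes."""
    rng = random.Random(seed)
    clusters = [1] * n_agents  # start with every actor isolated
    for _ in range(steps):
        # Pick a cluster with probability proportional to its size.
        i = rng.choices(range(len(clusters)), weights=clusters)[0]
        if rng.random() < frag_prob:
            # Fragmentation: the chosen cluster breaks into single actors.
            size = clusters.pop(i)
            clusters.extend([1] * size)
        else:
            # Coalescence: merge with a second size-weighted pick, if distinct.
            j = rng.choices(range(len(clusters)), weights=clusters)[0]
            if j != i:
                merged = clusters[i] + clusters[j]
                for idx in sorted((i, j), reverse=True):
                    clusters.pop(idx)
                clusters.append(merged)
    return clusters


# Hypothetical parameters, chosen only to exercise the sketch.
sizes = simulate_grouping(n_agents=200, steps=2000, frag_prob=0.05)
```

The total number of actors is conserved at every step, so the distribution of `sizes` is the only thing that changes; varying `frag_prob` shifts that distribution between many small groups and a few large ones.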

- Energy transfer in N-component nanosystems enhanced by pulse-driven vibronic many-body entanglement

Scientific Reports

The processing of energy by transfer and redistribution plays a key role in the evolution of dynamical systems. At the ultrasmall and ultrafast scale of nanosystems, quantum coherence could in principle also play a role and has been reported in many pulse-driven nanosystems (e.g. quantum dots and even the microscopic Light-Harvesting Complex II (LHC-II) aggregate). Typical theoretical analyses cannot easily be scaled to describe these general N-component nanosystems; they do not treat the pulse dynamically; and they approximate memory effects. Here our aim is to shed light on what new physics might arise beyond these approximations. We adopt a purposely minimal model such that the time-dependence of the pulse is included explicitly in the Hamiltonian. This simple model generates complex dynamics: specifically, pulses of intermediate duration generate highly entangled vibronic (i.e. electronic-vibrational) states that spread multiple excitons – and hence energy – maximally within the system. Subsequent pulses can then act on such entangled states to efficiently channel subsequent energy capture. The underlying pulse-generated vibronic entanglement increases in strength and robustness as N increases.

Fernando Gómez-Ruiz, Oscar Acevedo, Ferney Rodríguez, Luis Quiroga, Neil Johnson

- Cavity-induced switching between Bell-state textures in a quantum dot

Physical Review B

Nanoscale quantum dots in microwave cavities can be used as a laboratory for exploring electron-electron interactions and their spin in the presence of quantized light and a magnetic field. We show how a simple theoretical model of this interplay at resonance predicts complex but measurable effects. New polariton states emerge that combine spin, relative modes, and radiation. These states have intricate spin-space correlations and undergo polariton transitions controlled by the microwave cavity field. We uncover novel topological effects involving highly correlated spin and charge density that display singlet-triplet and inhomogeneous Bell-state distributions. Signatures of these transitions are imprinted in the photon distribution, which will allow for optical read-out protocols in future experiments and nanoscale quantum technologies.

Santiago Steven Beltrán Romero, Ferney Rodriguez, Luis Quiroga, Neil Johnson

- Rise of post-pandemic resilience across the distrust ecosystem

Scientific Reports

Why does online distrust (e.g., of medical expertise) continue to grow despite numerous mitigation efforts? We analyzed changing discourse within a Facebook ecosystem of approximately 100 million users who were focused pre-pandemic on vaccine (dis)trust. Post-pandemic, their discourse interconnected multiple non-vaccine topics and geographic scales within and across communities. This interconnection confers a unique, system-level (i.e., at the scale of the full network) resistance to mitigations targeting isolated topics or geographic scales, an approach many schemes take due to constrained funding (for example, focusing on local health issues but not national elections). Backed by numerical simulations, we propose counterintuitive solutions for more effective, scalable mitigation: utilize “glocal” messaging by blending (1) strategic topic combinations (e.g., messaging about specific diseases with climate change) and (2) geographic scales (e.g., combining local and national focuses).

Lucia Illari, Nicholas Johnson Restrepo, Neil Johnson

- Shockwavelike Behavior across Social Media

Physical Review Letters

Online communities featuring “anti-X” hate and extremism somehow thrive online despite moderator pressure. We present a first-principles theory of their dynamics, which accounts for the fact that the online population comprises diverse individuals and evolves in time. The resulting equation represents a novel generalization of nonlinear fluid physics and explains the observed behavior across scales. Its shockwavelike solutions explain how, why, and when such activity rises from “out-of-nowhere,” and show how it can be delayed, reshaped, and even prevented by adjusting the online collective chemistry. This theory and findings should also be applicable to anti-X activity in next-generation ecosystems featuring blockchain platforms and Metaverses.

Pedro Manrique, Frank Yingjie Huo, Sara El Oud, Minzhang Zheng, Lucia Illari, Neil Johnson

- Stochastic Modeling of Possible Pasts to Illuminate Future Risk

Oxford Academic

Disasters are fortunately uncommon events. Far more common are events that lead to societal crises, which are notable in their impact, but fall short of causing a disaster. Such near-miss events may be reimagined through stochastic modeling to be worse than they actually were. These are termed downward counterfactuals. A spectrum of reimagined events, covering both natural and man-made hazards, is considered. Included is a counterfactual version of the Middle East Respiratory Syndrome (MERS). Attention to this counterfactual coronavirus in 2015 would have prepared the world better for COVID-19.

Gordon Woo, Neil Johnson