Simplifying Complexity

In our last episode, Neil Johnson explained that an underlying power law with a slope of 1.8 describes the number of casualties that occur in wars.

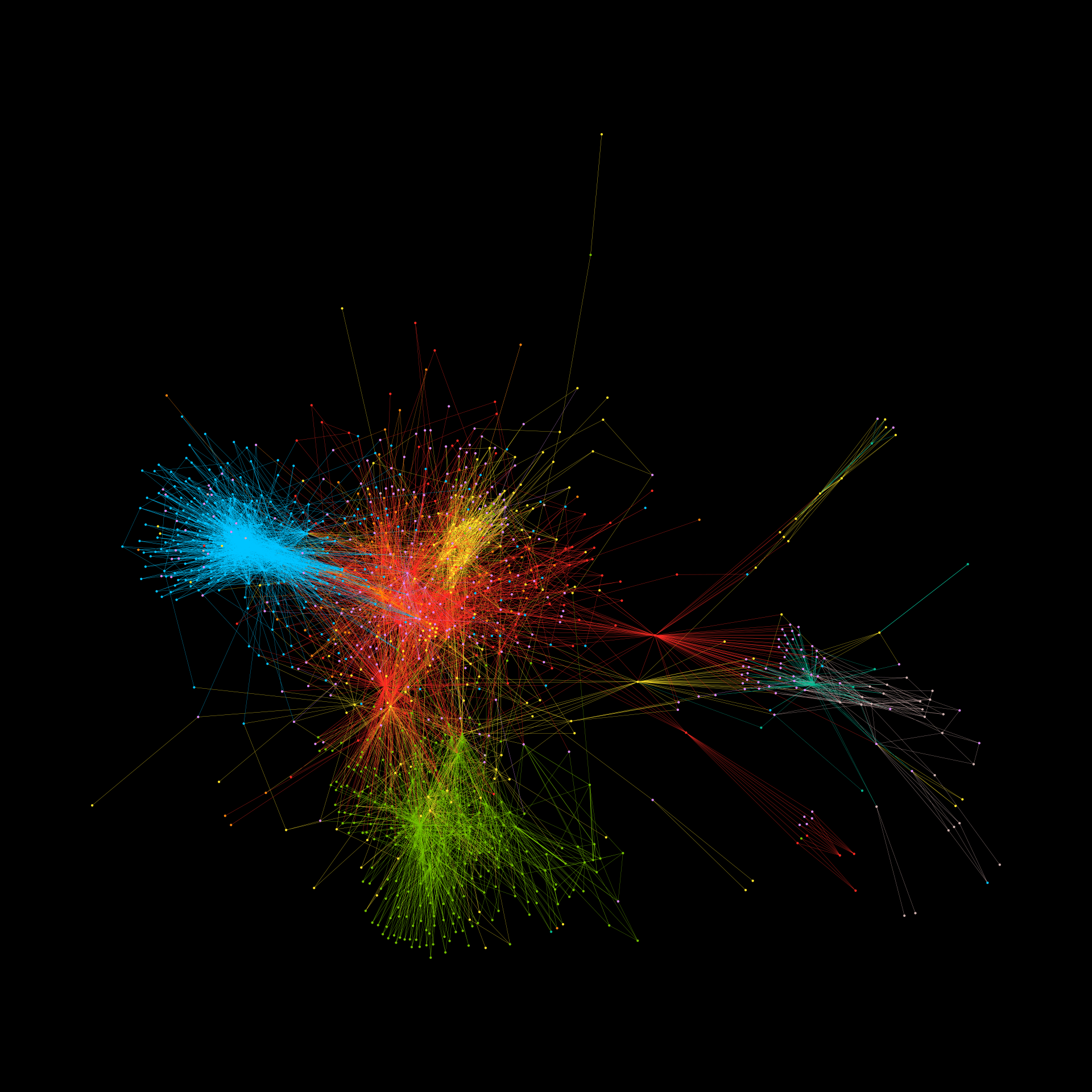

Today’s episode digs deeper into where this power law comes from, the route that Neil’s research took to explain it, and how the arrival of the internet finally provided the missing datasets needed to understand the underlying structure of something as seemingly chaotic as war.

Neil is Professor of Physics and Head of the Dynamic Online Networks Lab at George Washington University.