Europhysics Letters

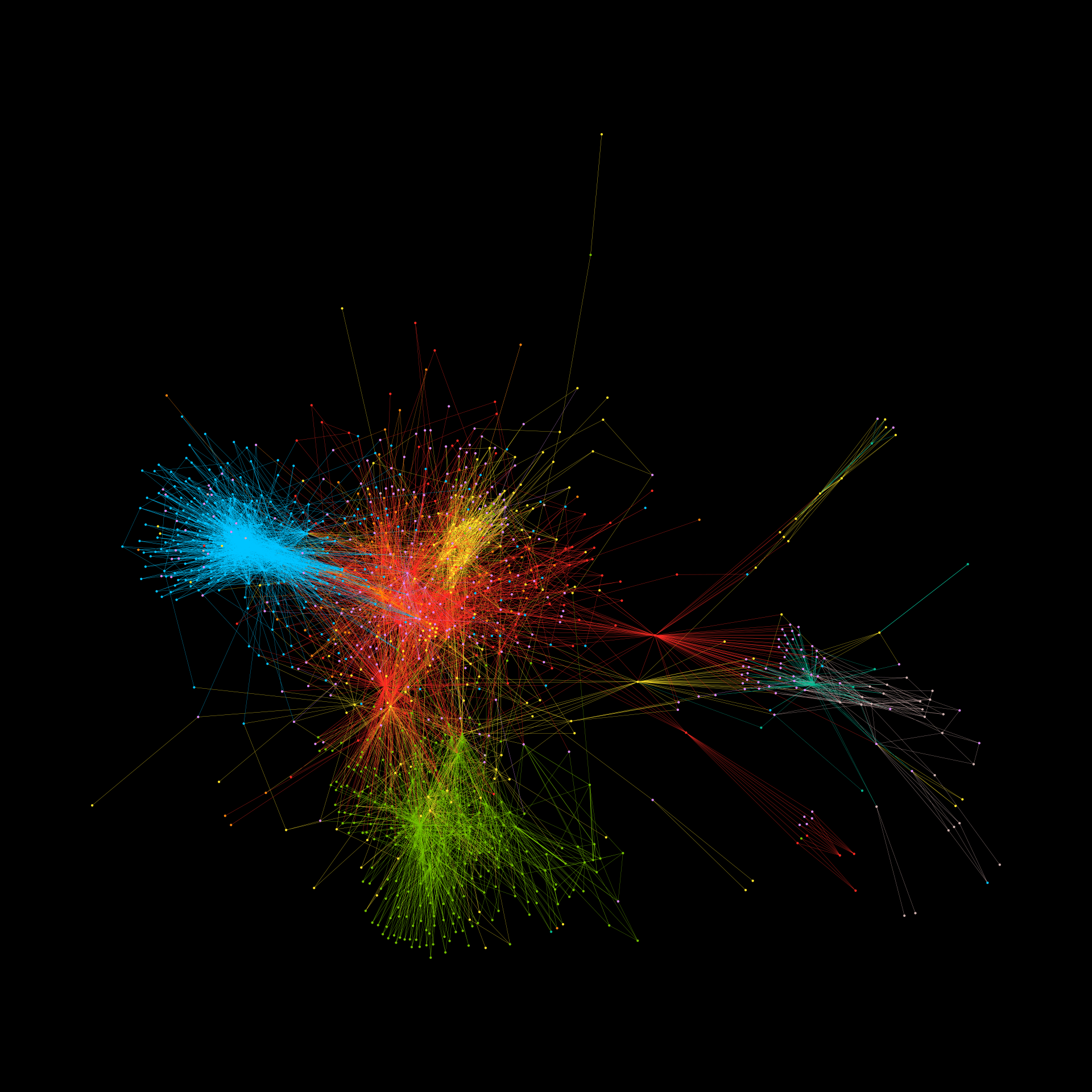

Why humans fight has no easy answer. However, a better understanding of how humans fight could inform future humanitarian aid planning and offer insight into hidden shifts relevant to peace efforts. Here we show that an empirically grounded physics theory of fighter dynamics, which generalizes the well-known physics of polymer assembly, can explain casualty patterns observed across decades of violence in a current conflict hotspot. It also suggests the possibility of future ‘super-shock’ surprise attacks that are even more lethal than any seen so far. These insights from physics open the door to new policy discussions surrounding humanitarian aid and peace efforts that account mechanistically for human violence across scales.

Frank Yingjie Huo, Dylan Restrepo, Pedro Manrique, Gordon Woo, Neil Johnson