Chaos: An Interdisciplinary Journal of Nonlinear Science

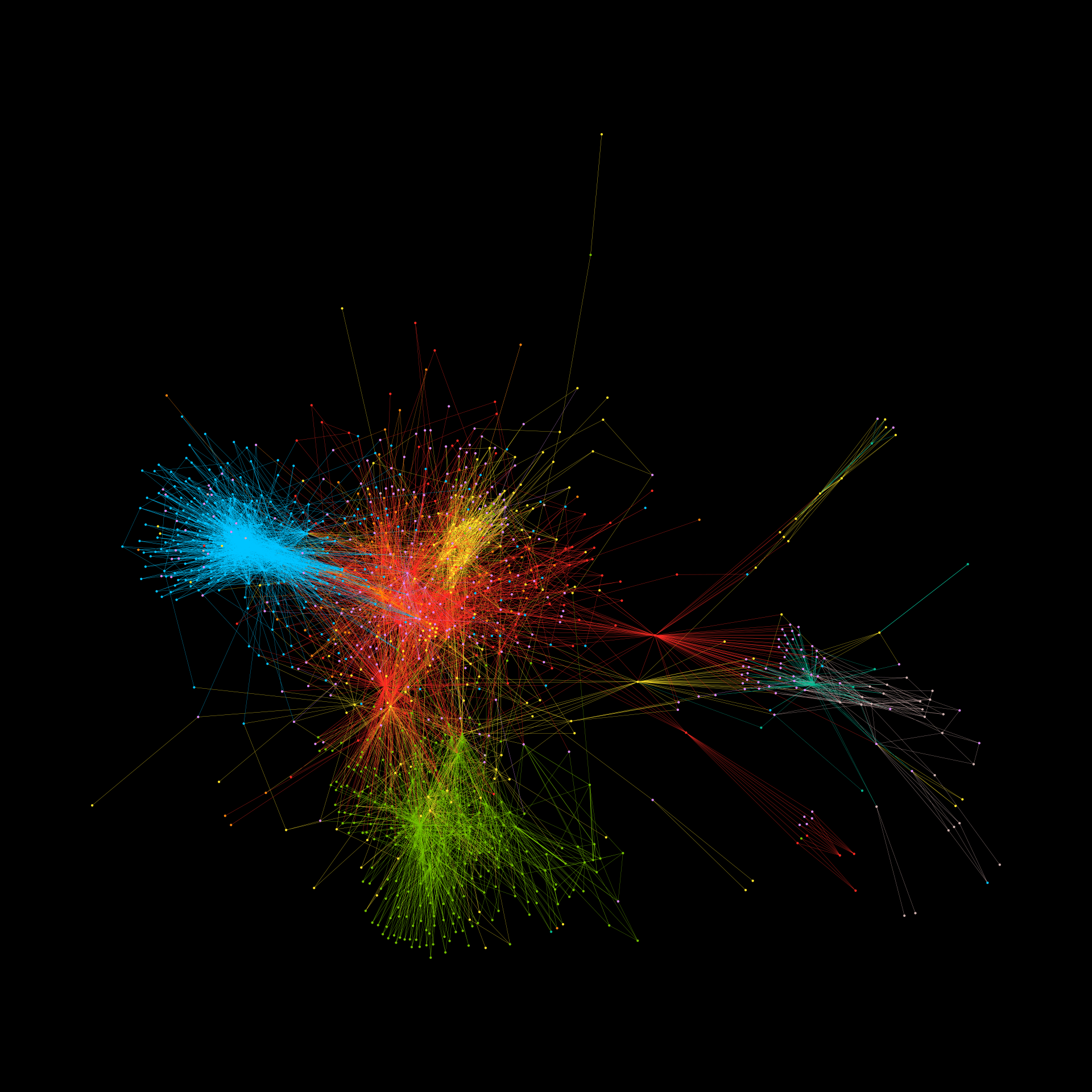

Understanding how harmful content (mis/disinformation, hate, etc.) spreads among online communities within and across social media platforms is an urgent societal challenge. We develop a nonlinear dynamical model of such viral spreading that accounts for the fact that online communities dynamically interconnect across multiple social media platforms. Our mean-field theory (Effective Medium Theory) compares well with detailed numerical simulations and provides a specific analytic condition for the onset of outbreaks (i.e., system-wide spreading). Even when the infection rate is significantly lower than the recovery rate, the theory predicts system-wide spreading if online communities create links between them at a high rate and the rate at which such links are lost (e.g., due to moderator pressure) is low. Policymakers should therefore account for these multi-community dynamics when shaping policies against system-wide spreading.