Physical Review Letters

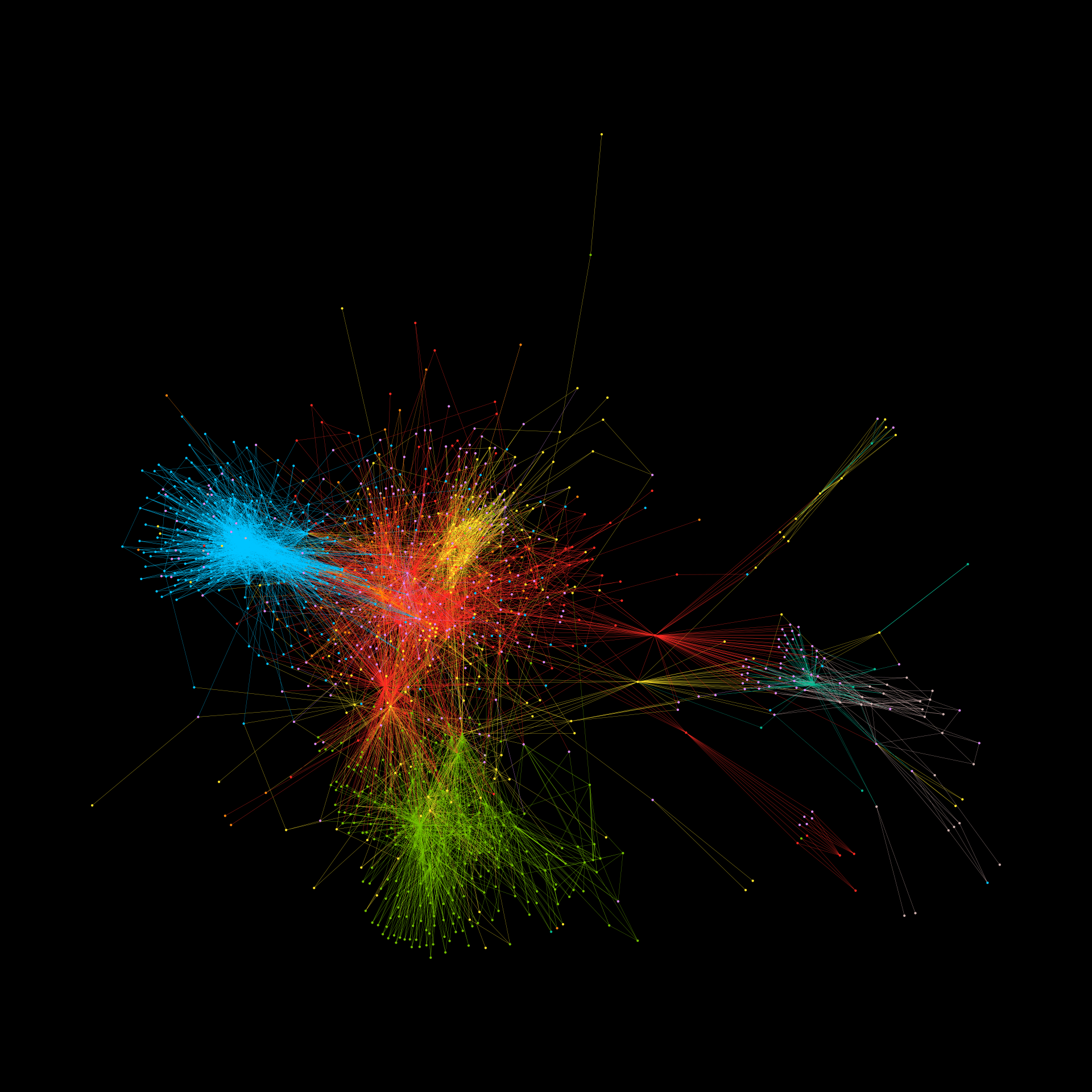

Online communities featuring “anti-X” hate and extremism somehow thrive despite moderator pressure. We present a first-principles theory of their dynamics, which accounts for the fact that the online population comprises diverse individuals and evolves in time. The resulting equation represents a novel generalization of nonlinear fluid physics and explains the observed behavior across scales. Its shockwavelike solutions explain how, why, and when such activity rises from “out of nowhere,” and show how it can be delayed, reshaped, and even prevented by adjusting the online collective chemistry. The theory and its findings should also be applicable to anti-X activity in next-generation ecosystems featuring blockchain platforms and Metaverses.

Pedro Manrique, Frank Yingjie Huo, Sara El Oud, Minzhang Zheng, Lucia Illari, and Neil Johnson