Curious By Nature Podcast

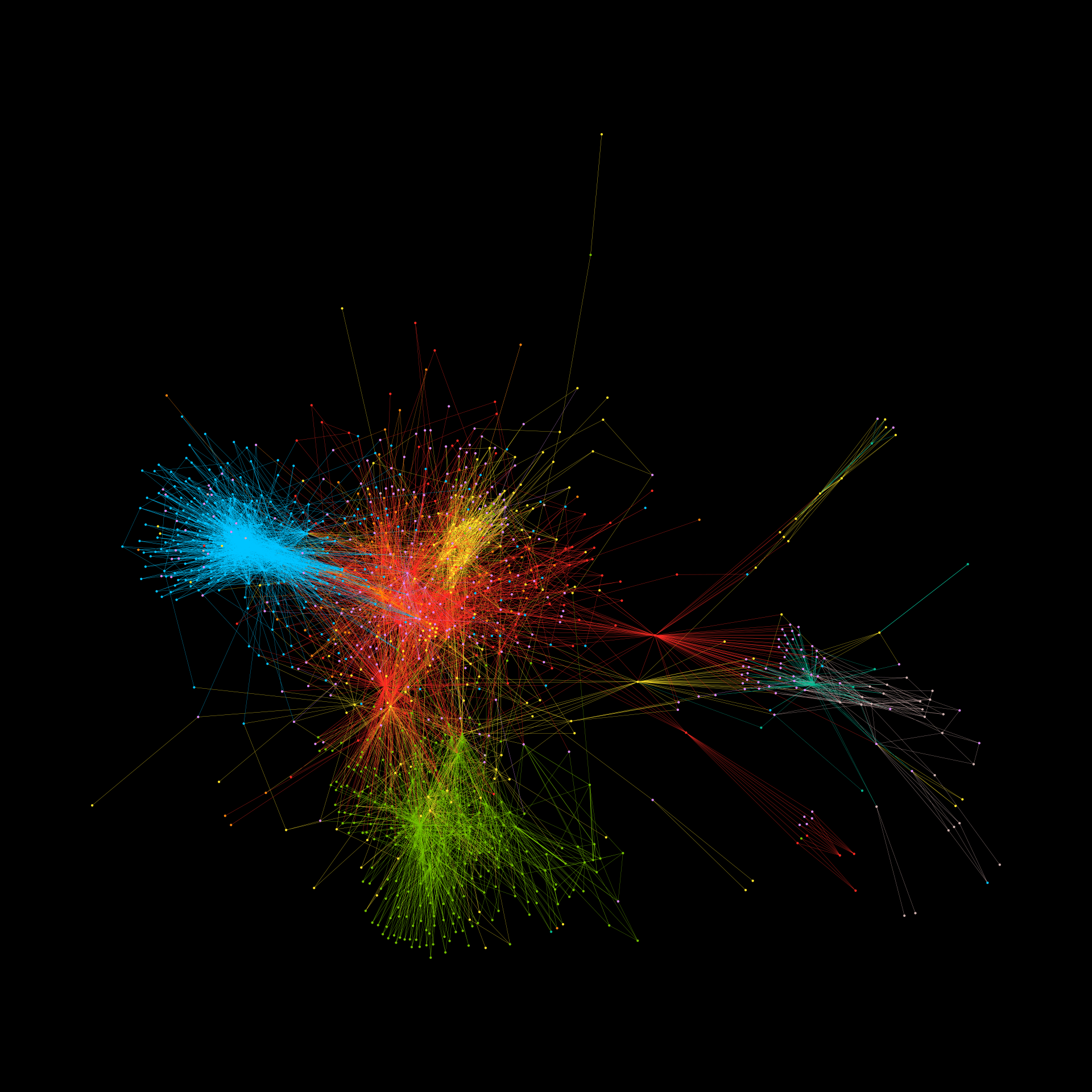

The run-up to the 2024 U.S. presidential election has seen unprecedented levels of misinformation, division, and hate speech on social media. Even as election day comes and goes and the votes are counted, online discourse is likely only to grow more heated. Online conversations about race, immigration, and other hot-button topics continue to attract extremist views that threaten to drown out anything resembling civil discourse. How do communities of hate operate? And how do they build networks of users that infiltrate both the major platforms and the darker corners of the web? To understand complex systems like this one, a researcher at George Washington University is drawing on his background in particle physics to map and analyze how hate speech flows across social media. We spoke before election day, before any votes were counted. So, without knowing the outcome, he offers a sobering warning: the biggest spike in online hate is likely to come after voters go to the polls.