PLOS One

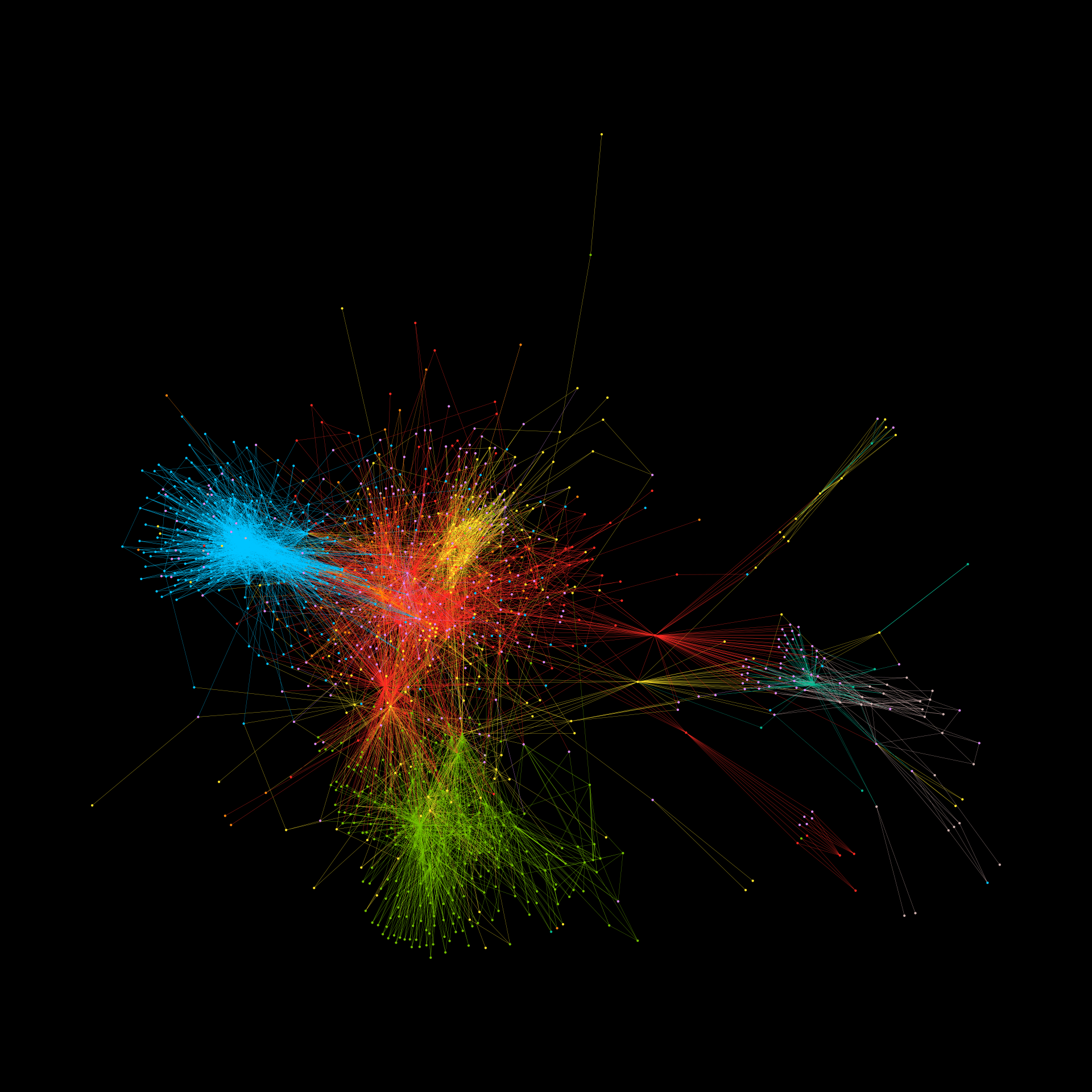

Online hate speech is a critical and worsening problem, with extremists using social media platforms to radicalize recruits and coordinate offline violent events. While much progress has been made in analyzing online hate speech, no study to date has classified multiple types of hate speech across both mainstream and fringe platforms. We conduct a supervised machine learning analysis of 7 types of online hate speech on 6 interconnected online platforms. We find that offline trigger events, such as protests and elections, are often followed by increases in types of online hate speech that bear seemingly little connection to the underlying event. This occurs on both mainstream and fringe platforms, despite moderation efforts, raising new research questions about the relationship between offline events and online speech, as well as implications for online content moderation.

Yonatan Lupu, Richard Sear, Nicolas Velásquez, Rhys Leahy, Nicholas Johnson Restrepo, Beth Goldberg, Neil Johnson