- When the cloud rains: Azure outage soaks industries worldwide

Straight Arrow News

A major outage hit Microsoft’s Azure cloud service Wednesday, impacting email and other Office 365 apps used by companies and governments worldwide. A post from the company said users may experience “latencies, timeouts and errors.”

- Why American values are changing when it comes to our health

CNN

Americans’ steadfast dedication to their beliefs can be a good thing, but it can also go too far, Dr. Jessica Steier says – like when adults yell at Girl Scouts that the cookies they’re selling are “poison.”

- AI’s Jekyll-and-Hyde Tipping Point

The Courier Express

Join PSW Science® on October 17th at 8 PM as we welcome Neil Johnson, Professor of Physics at George Washington University. In his lecture, Neil will address a pressing question: Why Do Trusted AI Systems Suddenly Turn Dangerous? During the question-and-answer period, in-person attendees and live stream viewers may ask the speaker questions, and in-person attendees may also engage with the speaker during the post-lecture reception.

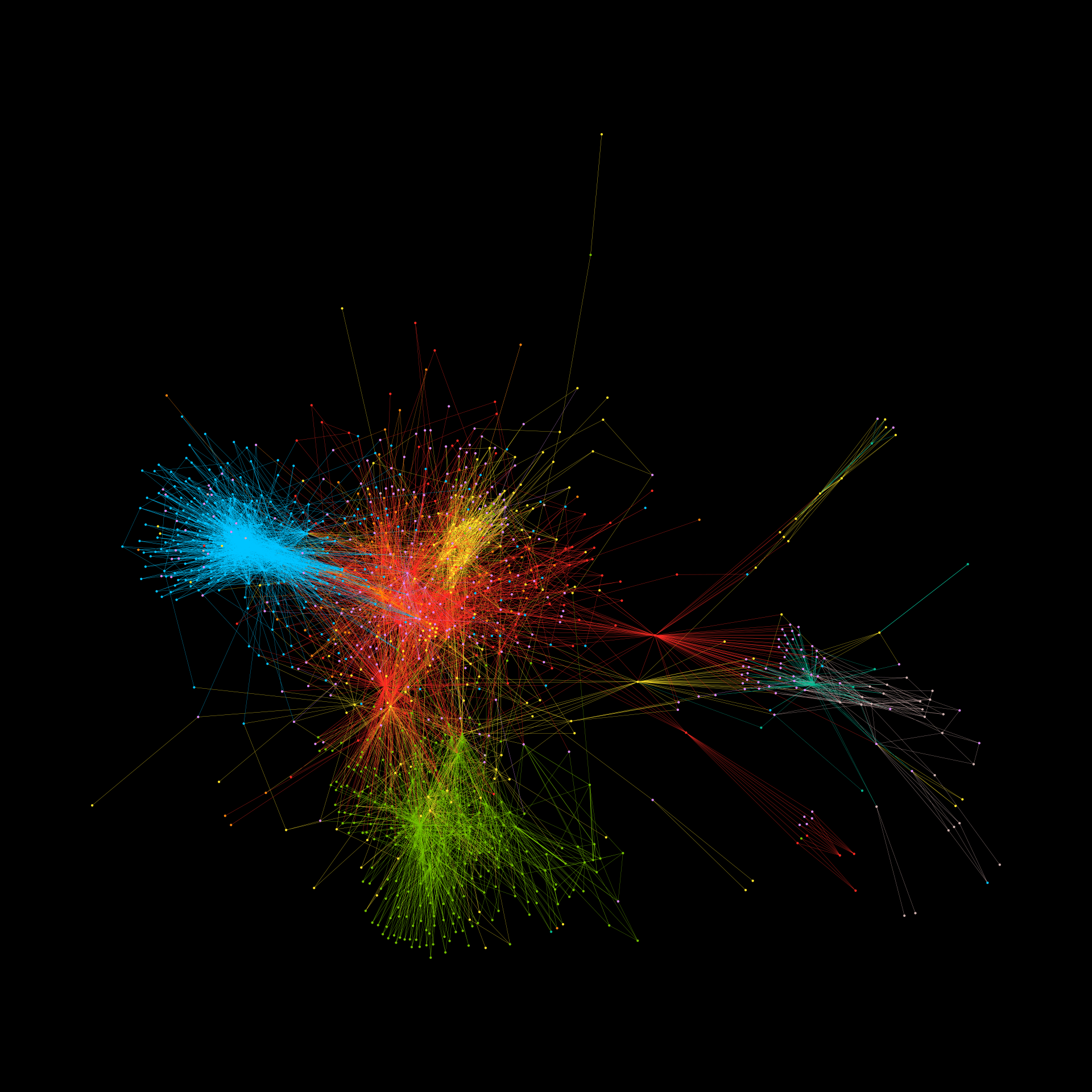

- The Physics of AI Hallucination: New Research Reveals the Tipping Point for Large Language Models

First Principles

Physicist Neil Johnson has mapped the exact moment AI can flip from accurate to false, and he says understanding its underlying physics could be the key to safer systems.

- Managing the Trust-Risk Equation in AI: Predicting Hallucinations Before They Strike

Security Week

New physics-based research suggests large language models could predict when their own answers are about to go wrong — a potential game changer for trust, risk, and security in AI-driven systems.

- Scrutiny of the Vaccine Injury Compensation Program

Newswise

Newswise — Health Secretary Robert F. Kennedy Jr.’s push to overhaul the Vaccine Injury Compensation Program is stirring debate over vaccine safety and trust in public health. Experts warn his rhetoric could fuel misinformation and undermine confidence in vaccines at a time when hesitancy is already at historic highs.

- Here’s why saying ‘please’ and ‘thank you’ costs 158,000,000 bottles of water a day

Metro UK

‘I wonder how much money OpenAI has lost in electricity costs from people saying “please” and “thank you” to their models.’

- Multispin Physics of AI Tipping Points and Hallucinations (AI Podcast)

Daily Papers AI

Today’s paper: Multispin Physics of AI Tipping Points and Hallucinations

- Should We Trust AI? Three Approaches to AI Fallibility

Security Week

The promise of agentic AI is compelling: increased operational speed, increased automation, and lower operational costs. But have we ever paused to seriously ask the question: can we trust this thing?

- “Big Sunscreen”: When Misinformation Fuels Extremist Conspiracy Theories

Beauty Matter

A broad spectrum of “sunscreen truthers” on platforms like TikTok, Instagram, and Facebook have peddled the trope that sunscreen causes cancer for at least the last decade. These types of conspiracy theories have reached a fever pitch on social media since the pandemic. From vegan anti-vaxxers and bro-biohackers to MAHA and QAnon supporters, they all have two things in common: a case of chemophobia and a belief that sunscreen is the enemy.