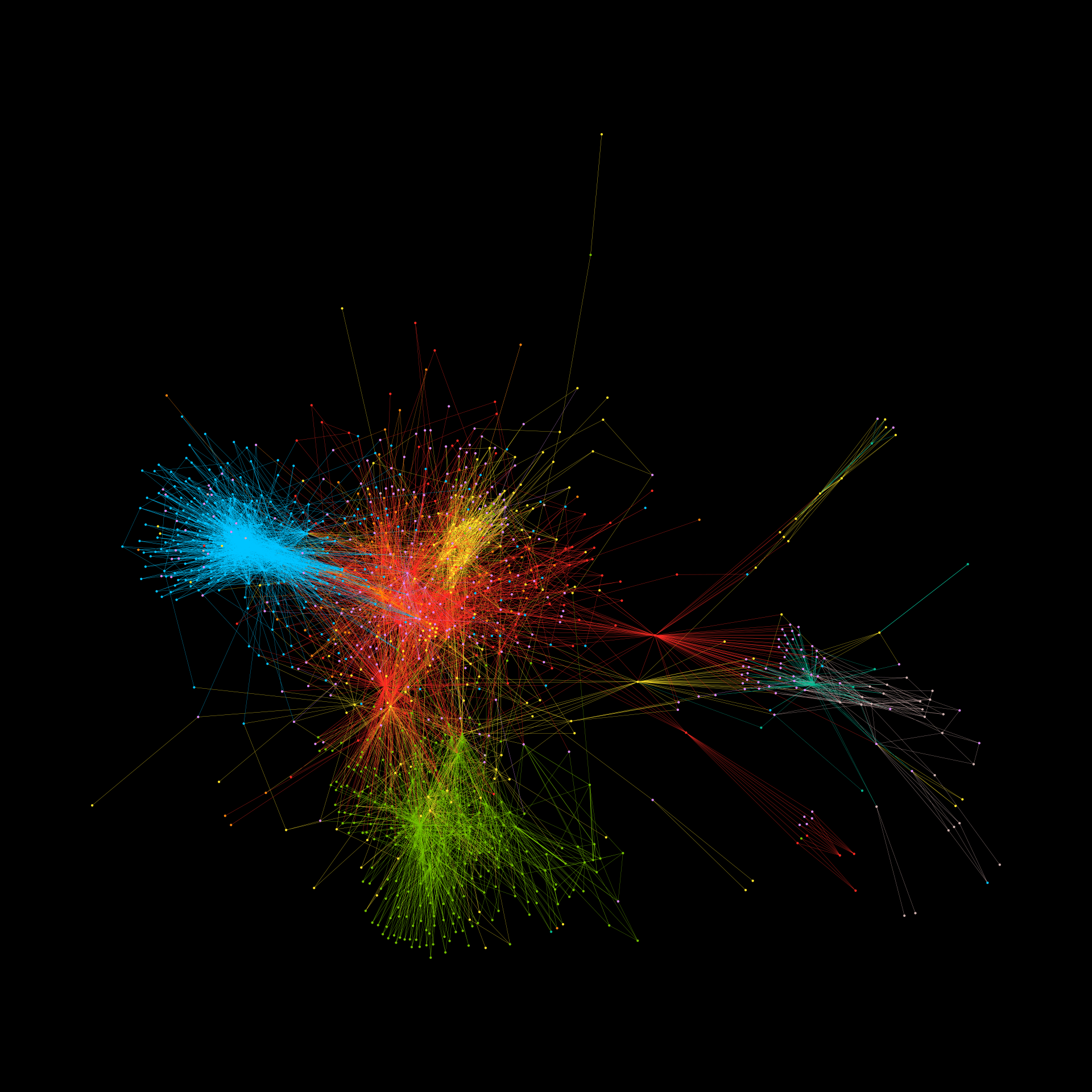

Calls are escalating for social media platforms to do more to mitigate extreme online communities whose views can lead to real-world harms. Examples include mis/disinformation and distrust that increased Covid-19 fatalities and now extend to monkeypox, unsafe baby formula alternatives, cancer, abortions, and climate change; white replacement beliefs that inspired the 2022 Buffalo shooter and will likely inspire others; anger that threatens elections, e.g., the 2021 U.S. Capitol attack; notions of male supremacy that encourage abuse of women; and anti-Semitism, anti-LGBTQ hate and QAnon conspiracies. But should ‘doing more’ mean doing more of the same, or something different? If something different, what? Here we start by showing why platforms doing more of the same will not solve the problem. Specifically, our analysis of nearly 100 million Facebook users entangled over vaccines, and now Covid and beyond, shows that the extreme communities’ ecology has a hidden resilience to Facebook’s removal interventions; that Facebook’s messaging interventions are missing key audience sectors and getting ridiculed; that a key piece of these online extremes’ narratives is being mislabeled as incorrect science; and that the threat of censorship is inciting the creation of parallel presences on other platforms with potentially broader audiences. We then demonstrate empirically a new solution that can soften online extremes organically without having to censor or remove communities or their content, check or correct facts, promote any preventative messaging, or seek a consensus. This solution can be automated at scale across social media platforms quickly and at minimal cost.

Elvira Maria Restrepo, Martin Moreno, Lucia Illari, Neil F. Johnson